LOGISTIC REGRESSION

Logistic Regression is a Machine Learning classification algorithm that is used to predict the probability of a categorical dependent variable. In logistic regression, the dependent variable is a binary variable that contains data coded as 1 (yes) or 0(no).It is a special case of linear regression where the target variable is categorical in nature. It uses a log of odds as the dependent variable.

Linear Regression Equation:

y= b0 + b1X1 +b2X2 +......bnXn

Where, y is dependent variable and X1, X2 ...

and Xn are explanatory variables.

Sigmoid Function:

p=1/1+e-y

Apply Sigmoid function on linear regression:

p=1/1+e-(b0 +

b1X1 + b2X2……bnXn)

In order to map predicted values to probabilities, we use

the sigmoid function. The function maps any real value into another value

between 0 and 1. In machine learning, we use sigmoid to map predictions to

probabilities.

Deciding the boundary:

Our

current prediction function returns a probability score between 0 and 1. In

order to map this to a discrete class (true/false), we select a threshold value

above which we will classify values into

class 1 and below which we classify values into class 2.

p≥0.5,class=1

p<0.5,class=0

For

example, if our threshold was .5 and our prediction function returned 0.7, we

would classify this observation as positive. If our prediction was 0.2 we would

classify the observation as negative. For logistic regression with multiple

classes we could select the class with the highest predicted probability.

Building logistic regression in python

To build Logistic regression we consider the

following dataset

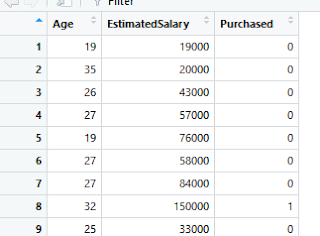

The dataset contains information of users of a social

site.It contains user_id ,gender, age, salary.It has several business clients

who can display their advertisement on the social site. One of the clients is a

car company who has just launched a luxury SUV for very high price.We will be

trying to see which user is going to buy SUV.We are going to build a model

which can predict whether the user can buy the luxury SUV.

Step1: Importing the dataset:

Output:

The dataset consists of following columns from which the prediction is based on only two variables Age and Estimated salary.We will find correlations between Age and salary and the decision to purchase.We are excluding other variables for simplicity in visualization.

Step 2:Splitting the dataset into training set and test set

Step 3:Applying Feature scaling to the dataset:

Step 4:Fitting logistic regression model in training set and predicting the test set results

Step 5:Generate confusion matrix.

A confusion matrix is a

summary of prediction results on a classification problem.The number of correct and incorrect predictions are summarized with count values.It

allows easy identification of confusion between classes e.g. one class is

commonly mislabeled as the other.

It gives us insight not

only into the errors being made by a classifier but more importantly the types

of errors that are being made.

Step5: Visualizing the training set results

Output:

Step6: Visualizing test set results

Output:

As seen in the above two graphs of training set and test set,the graph tells us about the users who bought SUV or not.The red users(dots) denotes users who didn't purchase SUV and green users who purchased SUV.Each user is characterized by its age and estimated salary.However in the graphs there are some predictions which turn out to be wrong i.e the green dots on the red region and vice-versa.This amount of incorrect is bound to happen and the number of incorrect and correct predictions can also be identified by confusion matrix.

So, main goal of building this model is classifying the right users into right category.The line between the two classes is the best fit classifier for this model.

Thanks for reading!!!